How to Stream AI Responses in JavaScript (ChatGPT & Claude)

November 1, 2025

Streaming lets you display tokens as the model generates them, so users get real-time feedback instead of staring at a blank screen until the complete response arrives.

Stream Responses from OpenAI (ChatGPT)

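Set `stream: true` in the request body, then read the response as Server-Sent Events (SSE): each event is a `data: ` line containing a JSON chunk, and the stream ends with `data: [DONE]`.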

```javascript
async function streamOpenAI(prompt) {
  const response = await fetch('https://api.openai.com/v1/chat/completions', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Authorization': `Bearer ${YOUR_OPENAI_API_KEY}`
    },
    body: JSON.stringify({
      model: 'gpt-3.5-turbo',
      messages: [{ role: 'user', content: prompt }],
      stream: true
    })
  });

  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let buffer = ''; // holds a partial line carried over between reads

  while (true) {
    const { done, value } = await reader.read();
    if (done) break;

    // Decode the chunk; { stream: true } keeps multi-byte characters
    // that are split across two chunks intact
    buffer += decoder.decode(value, { stream: true });
    const lines = buffer.split('\n');
    buffer = lines.pop(); // the last piece may be an incomplete line

    for (const line of lines) {
      if (line.startsWith('data: ')) {
        const data = line.slice(6);
        if (data === '[DONE]') continue;

        try {
          const parsed = JSON.parse(data);
          const content = parsed.choices[0]?.delta?.content || '';

          // Display each token as it arrives
          if (content) {
            console.log(content);
            // Or: append to DOM element
          }
        } catch (e) {
          // Skip malformed JSON
        }
      }
    }
  }
}
```
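`reader.read()` returns network chunks, not SSE lines: a single `data:` line can be split across two reads, so splitting and parsing each chunk on its own can silently drop tokens. A minimal sketch of the fix is to keep a buffer and only parse complete lines. `splitSSELines` is a hypothetical helper, not part of either API.

```javascript
// Hypothetical helper: splits buffered SSE text into complete lines.
// Returns the complete lines plus whatever partial line is left over,
// which the caller should prepend to the next decoded chunk.
function splitSSELines(buffer) {
  const parts = buffer.split('\n');
  const rest = parts.pop(); // '' if the buffer ended exactly on a newline
  const lines = parts
    .map(line => line.replace(/\r$/, '')) // tolerate CRLF line endings
    .filter(line => line !== '');         // blank lines separate events
  return { lines, rest };
}

// Usage: simulate a data line split across two network chunks.
let buffer = '';
const received = [];
for (const chunk of ['data: {"a":1}\n\ndata: {"b"', ':2}\n\n']) {
  buffer += chunk;
  const { lines, rest } = splitSSELines(buffer);
  buffer = rest;
  for (const line of lines) {
    if (line.startsWith('data: ')) received.push(JSON.parse(line.slice(6)));
  }
}
console.log(received); // [ { a: 1 }, { b: 2 } ]
```

Without the carried-over `rest`, the second event would arrive as two fragments, `data: {"b"` and `:2}`, and neither would parse.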

Stream Responses from Claude (Anthropic)

Claude's Messages API also streams SSE, but every event carries a `type` field, and the generated text arrives in `content_block_delta` events.

```javascript
async function streamClaude(prompt) {
  const response = await fetch('https://api.anthropic.com/v1/messages', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'x-api-key': YOUR_ANTHROPIC_API_KEY,
      'anthropic-version': '2023-06-01'
    },
    body: JSON.stringify({
      model: 'claude-3-haiku-20240307',
      max_tokens: 1024,
      messages: [{ role: 'user', content: prompt }],
      stream: true
    })
  });

  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let buffer = ''; // partial line carried over between reads

  while (true) {
    const { done, value } = await reader.read();
    if (done) break;

    // Network chunks can end mid-line, so only parse complete lines
    buffer += decoder.decode(value, { stream: true });
    const lines = buffer.split('\n');
    buffer = lines.pop();

    for (const line of lines) {
      // Claude also sends "event: ..." lines; the JSON "type" field carries the same name
      if (line.startsWith('data: ')) {
        const data = line.slice(6);

        try {
          const parsed = JSON.parse(data);

          // Claude sends content in content_block_delta events
          if (parsed.type === 'content_block_delta') {
            const content = parsed.delta?.text || '';

            if (content) {
              console.log(content);
              // Or: append to DOM element
            }
          }
        } catch (e) {
          // Skip malformed JSON
        }
      }
    }
  }
}
```
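Besides `content_block_delta`, Claude's stream includes other event types, among them `message_start`, `content_block_start`, `content_block_stop`, `message_delta`, `message_stop`, `ping`, and `error`. A sketch that stops on `message_stop` and surfaces `error` events instead of silently skipping them might look like this. `handleClaudeEvent` and its `{ text, done }` return shape are hypothetical; only the event and delta type names come from Anthropic's streaming format.

```javascript
// Hypothetical helper: maps one parsed Claude SSE event to { text, done }.
function handleClaudeEvent(event) {
  switch (event.type) {
    case 'content_block_delta':
      // Only text deltas carry display text; other delta types
      // (for example tool-input JSON) are ignored here.
      return {
        text: event.delta?.type === 'text_delta' ? event.delta.text : '',
        done: false
      };
    case 'message_stop':
      return { text: '', done: true };
    case 'error':
      // Errors can arrive inside the stream, after the HTTP 200 response
      throw new Error(`Claude stream error: ${event.error?.message ?? 'unknown error'}`);
    default:
      // message_start, content_block_start, ping, and so on
      return { text: '', done: false };
  }
}

// Usage with a few sample events:
const events = [
  { type: 'message_start', message: {} },
  { type: 'content_block_delta', index: 0, delta: { type: 'text_delta', text: 'Hi' } },
  { type: 'content_block_delta', index: 0, delta: { type: 'text_delta', text: ' there' } },
  { type: 'message_stop' }
];
let text = '';
for (const event of events) {
  const result = handleClaudeEvent(event);
  text += result.text;
  if (result.done) break;
}
console.log(text); // prints: Hi there
```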

Display Streaming Responses in the Browser

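Append each token to a DOM element as it arrives for a typewriter effect. Calling the API directly from the browser exposes your API key to anyone who opens DevTools, so in production send the request through your own backend and stream its response to the page.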

```javascript
const outputElement = document.getElementById('ai-output');
let fullResponse = '';

async function displayStreamingResponse(prompt) {
  outputElement.textContent = ''; // Clear previous
  fullResponse = '';

  const response = await fetch('https://api.openai.com/v1/chat/completions', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
      'Authorization': `Bearer ${YOUR_OPENAI_API_KEY}`
    },
    body: JSON.stringify({
      model: 'gpt-3.5-turbo',
      messages: [{ role: 'user', content: prompt }],
      stream: true
    })
  });

  const reader = response.body.getReader();
  const decoder = new TextDecoder();
  let buffer = ''; // partial line carried over between reads

  while (true) {
    const { done, value } = await reader.read();
    if (done) break;

    buffer += decoder.decode(value, { stream: true });
    const lines = buffer.split('\n');
    buffer = lines.pop(); // keep the incomplete trailing line

    for (const line of lines) {
      if (line.startsWith('data: ')) {
        const data = line.slice(6);
        if (data === '[DONE]') continue;

        try {
          const parsed = JSON.parse(data);
          const content = parsed.choices[0]?.delta?.content || '';

          if (content) {
            fullResponse += content;
            outputElement.textContent = fullResponse;
          }
        } catch (e) {
          // Skip malformed JSON
        }
      }
    }
  }

  return fullResponse;
}
```
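Every example in this post repeats the same read-and-parse loop. One way to factor it out is an async generator that yields tokens, so display code becomes a plain `for await` loop. This is a sketch for OpenAI's chunk format only; `streamOpenAITokens` is a hypothetical name, and it assumes an environment with `ReadableStream`, `TextDecoder`, and async generators (modern browsers and Node 18+).

```javascript
// Hypothetical helper: yields content tokens from an OpenAI streaming body.
async function* streamOpenAITokens(body) {
  const reader = body.getReader();
  const decoder = new TextDecoder();
  let buffer = '';
  try {
    while (true) {
      const { done, value } = await reader.read();
      if (done) break;
      buffer += decoder.decode(value, { stream: true });
      const lines = buffer.split('\n');
      buffer = lines.pop(); // keep the incomplete trailing line
      for (const line of lines) {
        if (!line.startsWith('data: ')) continue;
        const data = line.slice(6).trim();
        if (data === '[DONE]') return;
        let content;
        try {
          content = JSON.parse(data).choices?.[0]?.delta?.content;
        } catch {
          continue; // skip malformed JSON
        }
        if (content) yield content;
      }
    }
  } finally {
    // Stops the download if the consumer exits early; a no-op once the stream is closed
    await reader.cancel().catch(() => {});
  }
}
```

In the browser, `for await (const token of streamOpenAITokens(response.body)) outputElement.append(token);` appends each token as a text node instead of rewriting `textContent` with the whole accumulated string on every update.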

Stream AI Responses in React

Use state to accumulate and display streaming tokens in a React component.

```jsx
import { useState } from 'react';

function StreamingChatbot() {
  const [response, setResponse] = useState('');
  const [isStreaming, setIsStreaming] = useState(false);

  async function handleSubmit(prompt) {
    setResponse('');
    setIsStreaming(true);

    try {
      const res = await fetch('https://api.openai.com/v1/chat/completions', {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
          'Authorization': `Bearer ${YOUR_OPENAI_API_KEY}`
        },
        body: JSON.stringify({
          model: 'gpt-3.5-turbo',
          messages: [{ role: 'user', content: prompt }],
          stream: true
        })
      });

      const reader = res.body.getReader();
      const decoder = new TextDecoder();
      let accumulated = '';
      let buffer = ''; // partial line carried over between reads

      while (true) {
        const { done, value } = await reader.read();
        if (done) break;

        buffer += decoder.decode(value, { stream: true });
        const lines = buffer.split('\n');
        buffer = lines.pop(); // keep the incomplete trailing line

        for (const line of lines) {
          if (line.startsWith('data: ')) {
            const data = line.slice(6);
            if (data === '[DONE]') continue;

            try {
              const parsed = JSON.parse(data);
              const content = parsed.choices[0]?.delta?.content || '';

              if (content) {
                accumulated += content;
                setResponse(accumulated);
              }
            } catch (e) {
              // Skip malformed JSON
            }
          }
        }
      }
    } finally {
      setIsStreaming(false);
    }
  }

  return (
    <div>
      <button onClick={() => handleSubmit('Tell me a joke')} disabled={isStreaming}>
        {isStreaming ? 'Streaming...' : 'Send'}
      </button>
      <div>{response}</div>
    </div>
  );
}
```
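As written, the component has no way to stop a long answer, and the stream keeps running if the component unmounts mid-response. One option is to pass an `AbortController`'s `signal` to `fetch` and call `abort()` from a Stop button or a `useEffect` cleanup; aborting the fetch also errors the body stream, so a pending `reader.read()` rejects with an `AbortError`. The sketch below shows a related approach that cancels the reader directly, which keeps the loop framework-agnostic and testable without a network. `readUntilAborted` is a hypothetical helper.

```javascript
// Hypothetical helper: reads a ReadableStream until it ends or `signal` aborts.
// Returns true if the stream finished on its own, false if it was cancelled.
async function readUntilAborted(body, onChunk, signal) {
  const reader = body.getReader();
  if (signal.aborted) {
    await reader.cancel();
    return false;
  }
  // Cancelling the reader closes the stream, so the next read() resolves with { done: true }
  const onAbort = () => { reader.cancel().catch(() => {}); };
  signal.addEventListener('abort', onAbort, { once: true });
  try {
    while (true) {
      const { done, value } = await reader.read();
      if (done) break;
      onChunk(value);
    }
  } finally {
    signal.removeEventListener('abort', onAbort);
  }
  return !signal.aborted;
}
```

In a component, you might create the controller when a request starts, keep it in a `useRef`, and call `controller.abort()` from a Stop button or the effect cleanup.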

Handle Streaming Errors

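Check `response.ok` before reading the body, because a failed request (bad key, rate limit, server error) returns a JSON error instead of a stream. Then wrap the read loop in a try/catch so a dropped connection surfaces to the caller instead of leaving a half-finished response on screen.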

```javascript
async function streamWithErrorHandling(prompt) {
  try {
    const response = await fetch('https://api.openai.com/v1/chat/completions', {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        'Authorization': `Bearer ${YOUR_OPENAI_API_KEY}`
      },
      body: JSON.stringify({
        model: 'gpt-3.5-turbo',
        messages: [{ role: 'user', content: prompt }],
        stream: true
      })
    });

    // A failed request returns a JSON error body instead of a stream;
    // fall back to an empty object if that body isn't valid JSON
    if (!response.ok) {
      const error = await response.json().catch(() => ({}));
      throw new Error(`API Error: ${error.error?.message || response.statusText}`);
    }

    const reader = response.body.getReader();
    const decoder = new TextDecoder();
    let buffer = ''; // partial line carried over between reads

    while (true) {
      // Rejects if the connection drops mid-stream
      const { done, value } = await reader.read();
      if (done) break;

      buffer += decoder.decode(value, { stream: true });
      const lines = buffer.split('\n');
      buffer = lines.pop(); // keep the incomplete trailing line

      for (const line of lines) {
        if (line.startsWith('data: ')) {
          const data = line.slice(6);
          if (data === '[DONE]') continue;

          try {
            const parsed = JSON.parse(data);
            const content = parsed.choices[0]?.delta?.content || '';

            if (content) {
              console.log(content);
            }
          } catch (e) {
            console.warn('Failed to parse chunk:', e);
          }
        }
      }
    }
  } catch (error) {
    console.error('Streaming failed:', error);
    // Display error to user
    throw error;
  }
}
```
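A non-2xx status such as a rate limit (HTTP 429) or a transient 5xx is known before any tokens arrive, so one reasonable pattern is to retry the initial request with exponential backoff, and never retry once tokens are on screen, since a retry restarts the answer from scratch. A sketch, assuming you inject `fetch` so it can be tested; `fetchWithRetry`, its options, and the choice of retryable statuses are assumptions, not part of either API.

```javascript
// Hypothetical helper: retries the initial streaming request on 429 and 5xx.
// Only the request is retried; once you start reading the body, don't retry,
// or the user would see the answer restart. Network errors (a rejected fetch)
// propagate to the caller unchanged.
async function fetchWithRetry(url, init, { retries = 3, baseDelayMs = 500, fetchImpl = fetch } = {}) {
  for (let attempt = 0; ; attempt++) {
    const response = await fetchImpl(url, init);
    const retryable = response.status === 429 || response.status >= 500;
    if (response.ok || !retryable || attempt >= retries) return response;

    // Discard the error body before trying again
    await response.body?.cancel();
    // Exponential backoff: 500 ms, 1 s, 2 s, ... plus up to 100 ms of jitter
    const delay = baseDelayMs * 2 ** attempt + Math.random() * 100;
    await new Promise(resolve => setTimeout(resolve, delay));
  }
}
```

The caller still checks `response.ok` afterwards, because the last attempt's failure is returned rather than thrown.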

Key Differences Between OpenAI and Claude Streaming

Both APIs use Server-Sent Events (SSE) but have different response formats:

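- OpenAI: content is in `choices[0].delta.content`, and the stream ends with `data: [DONE]`.
- Claude: content is in `delta.text` within `content_block_delta` events, and the stream ends with a `message_stop` event.
- Headers: OpenAI uses `Authorization: Bearer <key>`; Claude uses `x-api-key` plus an `anthropic-version` header.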
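Because the differences are confined to the URL, headers, request body, and payload shape, one way to support both providers with a single read loop is a small lookup table. This is a sketch: `PROVIDERS`, `parseData`, and `buildRequest` are hypothetical names, and the model names and API-version date are copied from the examples in this post.

```javascript
// Hypothetical provider table capturing the differences listed above.
const PROVIDERS = {
  openai: {
    url: 'https://api.openai.com/v1/chat/completions',
    headers: apiKey => ({
      'Content-Type': 'application/json',
      'Authorization': `Bearer ${apiKey}`
    }),
    body: prompt => ({
      model: 'gpt-3.5-turbo',
      messages: [{ role: 'user', content: prompt }],
      stream: true
    }),
    // Maps one SSE `data:` payload to { text, done }
    parseData: data => {
      if (data === '[DONE]') return { text: '', done: true };
      const parsed = JSON.parse(data);
      return { text: parsed.choices?.[0]?.delta?.content ?? '', done: false };
    }
  },
  claude: {
    url: 'https://api.anthropic.com/v1/messages',
    headers: apiKey => ({
      'Content-Type': 'application/json',
      'x-api-key': apiKey,
      'anthropic-version': '2023-06-01'
    }),
    body: prompt => ({
      model: 'claude-3-haiku-20240307',
      max_tokens: 1024,
      messages: [{ role: 'user', content: prompt }],
      stream: true
    }),
    parseData: data => {
      const parsed = JSON.parse(data);
      if (parsed.type === 'message_stop') return { text: '', done: true };
      const text = parsed.type === 'content_block_delta' ? parsed.delta?.text ?? '' : '';
      return { text, done: false };
    }
  }
};

// Returns [url, init] ready to spread into fetch(...)
function buildRequest(providerName, prompt, apiKey) {
  const provider = PROVIDERS[providerName];
  return [provider.url, {
    method: 'POST',
    headers: provider.headers(apiKey),
    body: JSON.stringify(provider.body(prompt))
  }];
}
```

Usage: `const response = await fetch(...buildRequest('claude', prompt, apiKey));`, then pass each `data:` payload to `PROVIDERS.claude.parseData` inside the usual read loop and stop when `done` is true.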

More JavaScript Snippets

Popular Articles

I Can't Believe It's Not CSS: Styling Websites with SQL

Style websites using SQL instead of CSS. Database migrations for your styles. Because CSS is the wrong kind of declarative.

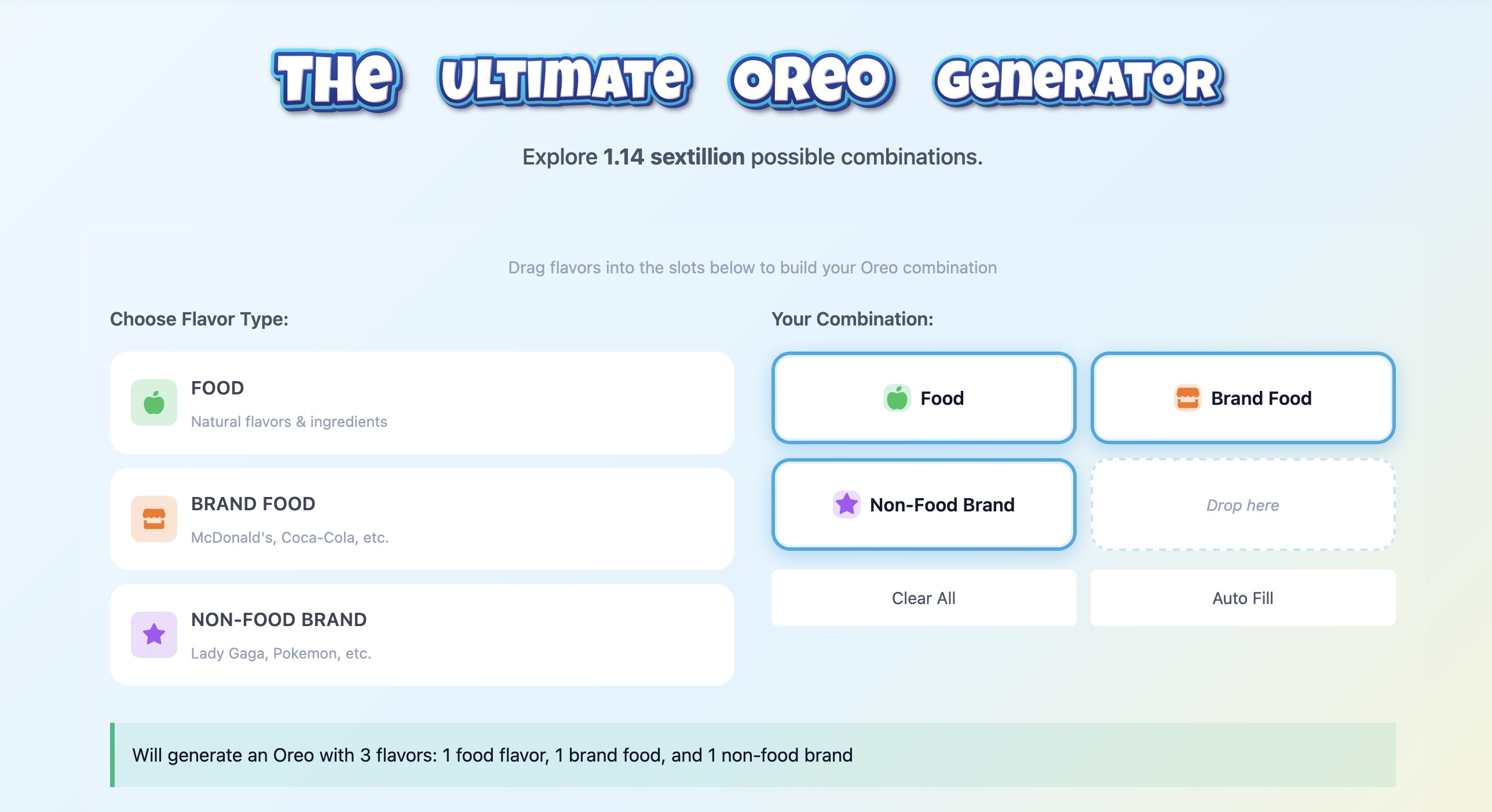

How I Built an Oreo Generator with 1.1 Sextillion Combinations

Building a web app that generates 1,140,145,285,551,550,231,122 possible Oreo flavor combinations using NestJS and TypeScript.

AI Model Names Are The Worst (tier list)

A comprehensive ranking of every major AI model name, from the elegant to the unhinged. Because apparently naming things is the hardest problem in AI.